Virginia Tech researchers study generative AI usage in higher education

While adoption of generative artificial intelligence is increasing in higher education and at Virginia Tech, gaps and roadblocks still exist on the way to improved integration.

Research at a glance

Context

Generative artificial intelligence (AI) is gaining ground in higher education among some groups.

Problem

This research shows how students and faculty are using AI and how an "AI divide" exists between certain users.

Solution

Researchers said policies that guide the ethical and effective use of generative AI should be implemented.

A Virginia Tech study found that while generative artificial intelligence (AI) tools such as ChatGPT, Google’s Gemini, and Microsoft’s Copilot are gaining traction in higher education, significant gaps in usage and attitudes persist.

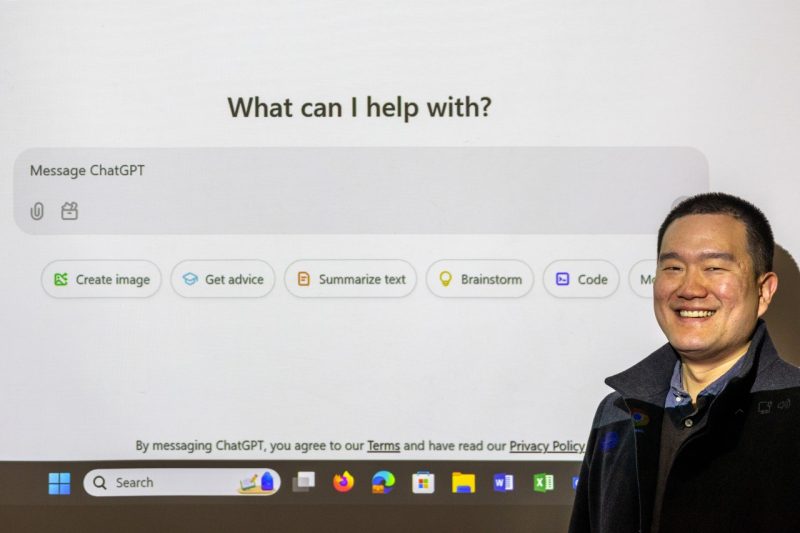

“This study provides empirical evidence about how Virginia Tech students and faculty use generative AI tools in their academic activities,” said Junghwan Kim, assistant professor in the Department of Geography and co-lead on the study. “Knowing this is crucial for creating informed policies at the university level to regulate and guide the use of AI in education. Without such data, policy decisions could lack a strong foundation.”

One of the main findings is that as of 2023, generative AI usage among students and faculty was relatively low. For instance, about 70 percent of students reported using AI tools less than once a week. Another significant finding was the difference in usage between STEM and non-STEM students: STEM students were more comfortable with generative AI, and used it more frequently, than their non-STEM peers.

The study highlights an "AI divide," particularly between STEM and non-STEM students. Bridging this gap is crucial as AI skills become increasingly important. According to Kim, offering educational programs or resources to help non-STEM students feel more comfortable with AI could be beneficial.

“This research is important because it provides empirical data about the actual adoption and impact of generative AI tools in higher education, moving beyond speculation to concrete evidence that can inform policy decisions,” said Dale Pike, associate vice provost for technology-enhanced learning and co-chair of the university’s AI Working Group. “The study reveals significant disparities in AI usage and comfort levels across disciplines and demographics, highlighting potential inequities that need addressing as these tools become increasingly integral to academic work.”

This research helps identify key areas where institutions need to develop thoughtful policies and support systems to ensure AI enhances learning outcomes.

The research was published recently in Springer's Innovative Higher Education. The research team also included Michelle Klopfer, a research assistant in engineering education at Virginia Tech.

This project grew out of collaborative efforts with Technology-enhanced Learning and Online Strategies at Virginia Tech to better understand how to integrate generative AI effectively into university practices.

Students found generative AI easier and more enjoyable to use than faculty did, and STEM students were more positive about its utility than non-STEM students. Male STEM students were the most frequent users, while female non-STEM students used it the least.

There was a difference in perception between students and faculty, however. According to the study, students believe generative AI can enhance academic performance. Faculty, on the other hand, view academic performance more holistically and are concerned that over-reliance on AI could undermine deeper learning.

What all groups agreed on, though, is the need for clear university policies regarding ethical use of AI in higher education.

Regarding university policy development, Virginia Tech is taking a nuanced approach to generative AI guidance that reflects the diverse ways these tools are used across academic disciplines and contexts. Rather than developing a single overarching policy, the university is carefully evaluating existing policies and working to provide specific guidance that addresses different use cases and their associated considerations.

“The rapidly evolving nature of generative AI means we need to be both thoughtful and adaptable in our approach,” Pike said. “We expect to release initial guidance this semester that will help faculty and students make informed decisions about AI use while maintaining academic integrity and promoting innovation in teaching and learning.”

This guidance will build on existing academic policies while providing additional clarity around generative AI applications in areas such as research, coursework, and administrative tasks. Faculty will retain significant autonomy in determining appropriate AI use for their specific courses and disciplines, supported by university-level frameworks for ethical implementation.

“Our next steps will focus on collaborating with university leaders to develop clear, inclusive policies that guide the ethical and effective use of generative AI,” Klopfer said. “We also aim to design training programs and resources to help both students and faculty – especially those in non-STEM disciplines – gain confidence in using AI tools. By prioritizing equity and fostering AI literacy, we can ensure these technologies enhance learning for everyone without compromising critical thinking or deep understanding.”