Researchers use AI to make children safer online

Virginia Tech researchers are using artificial intelligence to build an education program to help children and teens avoid sexual predators online.

A teenager sends some explicit pictures requested by a new online love interest, only to get a blackmail threat from someone in another country.

A tween goes to meet a teen girl who bought her jewelry online, but she’s greeted by a man she doesn’t know.

From the threat of “sextortion” to cybergrooming, children and teens face a growing range of online crimes, and three Virginia Tech researchers are working to make the digital world safer for them.

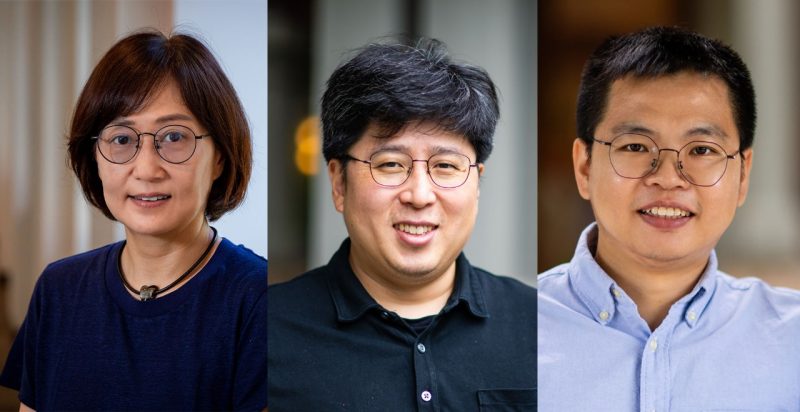

Under the auspices of a National Science Foundation (NSF) grant, Jin-Hee Cho and collaborators Lifu Huang and Sang Won Lee in the Virginia Tech Department of Computer Science want to develop a technology-assisted education program to prevent online sexual abuse of children and teens.

At a glance

- One in three internet users worldwide is a child, according to the World Health Organization

- Grooming children for sexual abuse is a growing online threat

- Virginia Tech researchers are working to build and train chatbots powered by artificial intelligence (AI) to help children and teens identify and avoid cyber predators

Growing threats

Traditionally, cybersecurity has focused on protecting networks from disruption and devices from bad actors looking to steal data, said Jin-Hee Cho, associate professor of computer science and lead researcher on the project.

“But today, people are becoming more interested in what is called social cybersecurity,” Cho said. “We’re looking at the ways that technology and people are interacting with each other and how people are interacting through networks and devices.”

Often, those interactions are good for society, disseminating information, enabling education and communication, and fueling collaboration that leads to economic development and innovation.

But there are risks, too, especially for vulnerable groups like children and teens.

In a 2021 national survey study published in JAMA Network Open, 2,639 young adults were asked about technology-assisted sexual offenses committed against them as minors. They reported a range of predatory online contacts. Among them:

- 15.6 percent reported being victims of online child sexual abuse

- 11 percent were victims of image-based sexual abuse

- 7.2 percent received non-consensual sexting

- 5.4 percent were subjected to online grooming by adults

- 3.5 percent said they were tricked into providing sexualized images and then extorted for money to keep the materials from being leaked, a crime dubbed “sextortion”

Many groups are working to make online interactions safer. In 2005, the U.S. Senate designated June as National Internet Safety Month to raise awareness of internet dangers and highlight the need for education about online safety, especially among young people.

Technology-assisted education

Much of the work in online child abuse prevention focuses on detecting perpetrators of online crimes. While that’s helpful, Cho said, it’s limited because it still leaves children and teens vulnerable to abusers.

For several years, Cho has been working to develop a project that would go further and give children tools and training to help them protect themselves from perpetrators who use the internet to groom children and teens for sexual exploitation. It’s been a challenge because there are so many ethical issues related to teaching children about abusers and the tactics they use, she said.

This new project will focus on developing chatbots to simulate interactions between predators and children online. These simulations can then be used to develop an educational program primarily for 11- to 15-year-olds.

- Title: Using Intelligent Conversational Agents to Empower Adolescents to be Resilient Against Cybergrooming

- Grantor: National Science Foundation

- Funding: $855,127

- Timeframe: 2024-27

Given the challenges, it’s a highly collaborative project.

Huang, assistant professor of computer science, will work on various aspects of the AI that powers the bots.

“I have a very strong interest and a strong belief in using AI techniques to solve social problems,” Huang said.

He will leverage his previous research in conversational AI, which uses large language models to make the educational bots believable as humans interacting with one another.

Lee, also an assistant professor of computer science, will work on compiling data to train the bots. It’s a particularly thorny issue, he said, because he will need to find data about how predators interact online and then use it responsibly.

“The challenge for this work is that there is no authentic cybergrooming conversation dataset we can use to train the chatbots,” Lee said. “We plan to approach the problem using human-centered approaches and establish an ethical platform in which adolescents and their parents can collaborate to generate such data and enhance their awareness of cybergrooming as part of the data collection process.”

Pamela Wisniewski, associate professor of computer science at Vanderbilt University and a specialist in privacy and online safety for adolescents, is another collaborator. She will be working under a related $344,874 National Science Foundation grant to consult with teens and experts in cyber abuse and sex trafficking prevention to develop training materials and ensure that the chatbots are safe for minors to use.

“My hope is that we can design, develop, and evaluate a tool that can benefit teens by teaching them about the risks of cybergrooming, as well as coping mechanisms to protect themselves from these risks,” Wisniewski said. “The goal isn’t to restrict and surveil their use of the internet. Instead, we need to give them the tools needed to navigate the internet safely.”