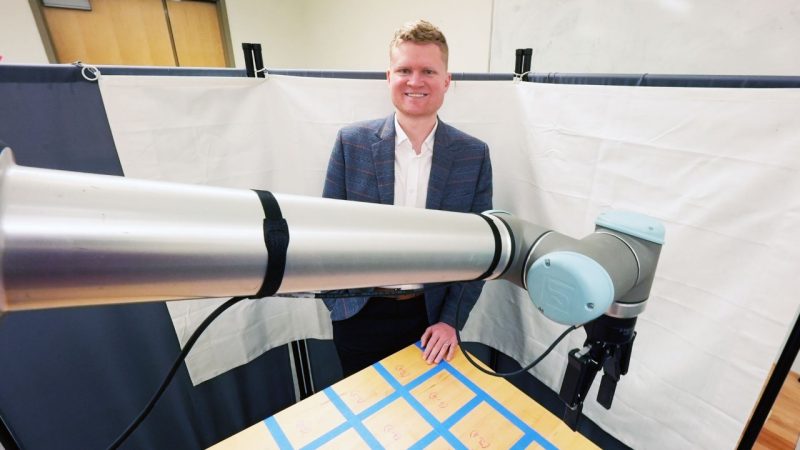

Dylan Losey receives National Science Foundation CAREER award to build assistive AI

Losey leads a team that has explored the possibilities of human-robot interaction, and now he will expand that research to develop robots that understand — and are understood by — their everyday users.

How do we get valuable robotic technology into the hands of people who need it without teaching everyone to write code?

This question is answered a little more every day in the lab of Dylan Losey, assistant professor in the Department of Mechanical Engineering. His team has focused its talents on building collaboration between robots and humans, composing fundamental algorithms that work beneath the surface and creating artificial intelligence (AI) that can adapt to human commands. Now he has received a five-year National Science Foundation (NSF) Faculty Early Career Development (CAREER) award to do more.

Human-robot interaction takes many forms in Losey's lab. The team has worked on assistive robot arms that help people with disabilities, autonomous vehicles that can share roads with human-driven cars, and manufacturing robots that collaborate with workers to assemble parts.

Losey thinks this technology shows incredible potential if people and machines can continue learning to work together.

“We are on the verge of robots entering our roads, homes, and everyday lives,” Losey said. “But for these robots to seamlessly interact with humans, we first need to make sure that humans and robots are on the same page, that they understand each other.”

Can we talk?

Robots learn and adapt as they work with humans. For humans and robots to get on the same page, a robot needs to learn what its user needs and how it should behave. But the human also needs to know what the robot is thinking and what it might do next. For robots to seamlessly collaborate with everyday users, Losey envisions a two-way conversation.

“Imagine a person living with physical disabilities who relies on their assistive robot arm,” said Losey. “To get on the same page, this robot needs to learn what the user wants, such as pouring a glass of water. The human needs to know what the robot is thinking, maybe that the water is outside the arm’s workspace. Without mutual understanding, our interaction breaks down. The robot is unsure how to assist the user, and the user does not know what they should expect from the robot.”

Bridging the communication gap between human and robot requires communication in both directions, which in turn creates an accessible framework for interaction. Once that framework is built, intelligent systems and everyday users can start to understand one another and solve problems together.

Tools for a wide range of users

Facilitating learning and communication between people and robots creates a more robust set of options for applying the technology. For this study, Losey’s team will create new ways to control devices, such as wheelchair-mounted robot arms for users with physical disabilities, and develop an array of communication devices using visual interfaces and touch-based technologies to accept commands.

To share this ongoing research with the community, Losey’s group will travel to the Virginia School for the Deaf and the Blind. Together, the school’s students and the researchers will explore how to build robots that are clear and understandable.

Overall, Losey sees this project as a critical step towards a unified, inclusive, and accessible framework for human-robot interaction in which intelligent systems and everyday users converge towards mutual understanding.

“Robotics and AI should not be hidden behind closed doors,” Losey said. “I want to develop systems for people that can be used by people. When you purchase a robot for your home, you should be able to teach the robot to do what you want and also understand exactly what the robot has learned from you.”